Join our Mailing List!

Join the mailing list to hear updates about the world of data science and exciting projects we are working on in machine learning, net zero and beyond.

DALL.E 2, developed by OpenAI, is a new AI system that can generate realistic images and art from a natural-language text description.

At SeerBI we were delighted to be given early research access to DALL.E 2 by OpenAI, and we have been using it extensively over the last few months for everything from research and machine learning to a bit of fun.

Given a simple text input, the AI uses natural language processing together with a series of neural network layers, applied through a diffusion model approach, to generate images. The system currently generates four images per prompt, giving variations on the input.
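For readers curious about what "a diffusion model approach" means, here is a minimal sketch of the forward (noising) half of a standard DDPM-style diffusion process in plain Python. A diffusion model is trained to undo this gradual corruption by predicting the added noise, and generation runs that learned reversal starting from pure noise. The linear schedule and its values (1e-4 to 0.02 over 1,000 steps) are common DDPM defaults assumed here for illustration, not DALL.E 2's actual settings, and the "image" is just a short list of pixel values.

```python
import math
import random
from typing import List, Tuple


def linear_beta_schedule(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Per-step noise variances beta_t, increasing linearly (a common DDPM default, assumed here)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas: List[float]) -> List[float]:
    """alpha_bar_t = product of (1 - beta_s) for s <= t: the share of the original signal left at step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0: List[float], t: int, alpha_bars: List[float],
              rng: random.Random) -> Tuple[List[float], List[float]]:
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise.
    Returns (x_t, noise); a denoising network would be trained to predict `noise` from (x_t, t)."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]
    return xt, noise


# Usage: a toy 16-pixel "image" is almost untouched at the first step and almost pure noise at the last.
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
rng = random.Random(0)
image = [0.5] * 16
early, _ = add_noise(image, 0, alpha_bars, rng)
late, _ = add_noise(image, 999, alpha_bars, rng)
print(round(alpha_bars[0], 6), alpha_bars[-1] < 1e-3)  # 0.9999 True
```

The closed-form jump to any step t is what makes training practical: the network can be shown a randomly chosen noise level for each example without simulating every intermediate step.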

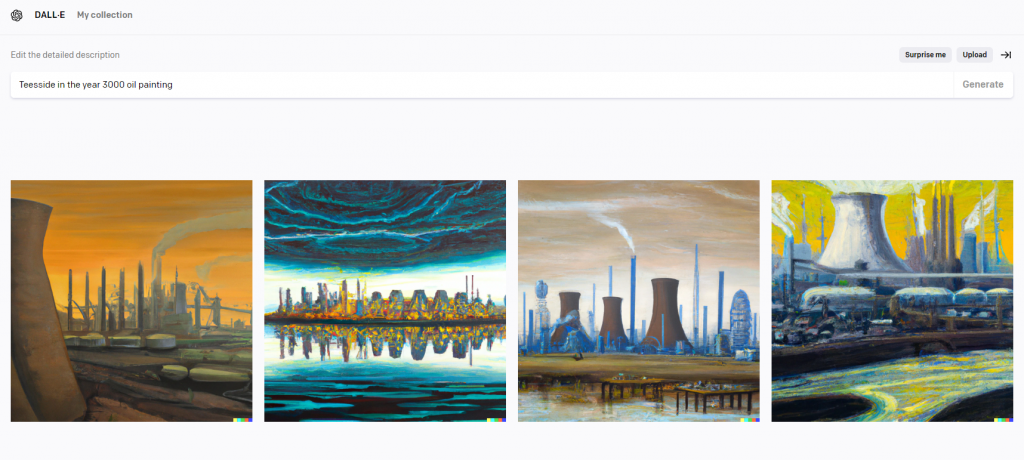

The system works best when the text input is specific, for example naming an art style such as “oil painting”, “digital art” or “3D render”. Even the featured image above was generated by the AI from the prompt “Teesside in the year 3000 oil painting”.

This model builds on OpenAI’s earlier model CLIP, which learns a shared embedding space for images and text and can therefore match images to natural-language descriptions. A second model, the prior, translates the CLIP text embedding of the prompt into a CLIP image embedding, and a decoder then generates an image conditioned on that image embedding using a diffusion model. The decoder’s output is 64 × 64 px; two upsampler models (also diffusion models) enlarge it to 256 × 256 px and then to 1024 × 1024 px, so the final image is always 1024 × 1024 px. During training, the upsamplers’ low-resolution inputs are deliberately degraded, for example with a Gaussian blur, so that they learn to restore sharp detail reliably.
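As OpenAI describes it, DALL.E 2 turns a prompt into an image in stages: a CLIP text embedding, a prior that maps it to a CLIP image embedding, a diffusion decoder that produces a 64 × 64 px image, and two upsamplers that take it to 256 × 256 and then 1024 × 1024 px. The Python sketch below traces that flow. Every model in it is a hypothetical toy stand-in (`clip_text_embedding`, `prior`, `decoder` and `upsample` are invented names, not OpenAI’s API), `EMBED_DIM` is an arbitrary placeholder, and nearest-neighbour enlargement replaces the real diffusion upsamplers; it only shows how data and image sizes move through the stages and how one prompt can yield four different variations.

```python
import random
from typing import List

EMBED_DIM = 8  # placeholder size for this toy; not DALL.E 2's real embedding size
Image = List[List[float]]  # greyscale H x W grid standing in for an RGB image


def clip_text_embedding(prompt: str) -> List[float]:
    """Hypothetical stand-in for CLIP's text encoder: toy features derived from the characters."""
    vec = [0.0] * EMBED_DIM
    for i, ch in enumerate(prompt):
        vec[i % EMBED_DIM] += ord(ch) / 1000.0
    return vec


def prior(text_emb: List[float]) -> List[float]:
    """Hypothetical stand-in for the prior, which maps a CLIP text embedding to a CLIP image embedding."""
    return [0.5 * v for v in text_emb]


def decoder(image_emb: List[float], rng: random.Random, size: int = 64) -> Image:
    """Hypothetical stand-in for the diffusion decoder: a size x size image conditioned on the embedding.
    The random offset mimics how different noise samples give different variations."""
    offset = rng.random()
    return [[image_emb[(r + c) % EMBED_DIM] + offset for c in range(size)] for r in range(size)]


def upsample(image: Image, factor: int = 4) -> Image:
    """Nearest-neighbour enlargement; the real upsamplers are diffusion models that add detail."""
    out: Image = []
    for row in image:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def generate(prompt: str, n_images: int = 4, seed: int = 0) -> List[Image]:
    """Run the toy pipeline once per requested variation."""
    rng = random.Random(seed)
    image_emb = prior(clip_text_embedding(prompt))  # text -> CLIP image embedding
    images = []
    for _ in range(n_images):
        img = decoder(image_emb, rng)  # 64 x 64
        img = upsample(img)            # 256 x 256
        img = upsample(img)            # 1024 x 1024
        images.append(img)
    return images


images = generate("Teesside in the year 3000 oil painting")
print(len(images), len(images[0]), len(images[0][0]))  # 4 1024 1024
```

The embedding is computed once per prompt, while each variation gets its own random draw in the decoder, which is why the same text can produce four distinct images.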

The DALL.E 2 model has a wide range of use cases, starting with the instant generation of social media images for marketing, which cuts out much of the graphic design work needed to create them. The model can also generate photo-realistic images for social media posts, such as this image generated from the prompt “an Italian vista with a galaxy clear in the sky”.

Another use case is prototyping designs or scenes for movies, video games or any other art, cutting out lengthy design tasks and allowing prototyping to happen much faster. Here is a video game idea generated by the AI from the prompt “pixel art of a racoon in a street dressed as a superhero fighting a squirrel”:

Illustrations for books and the visualisation of concepts are another impressive use case of the AI. For example, a writer working on a children’s book could input their text into the model with a chosen art style to get illustrations, saving cost and time, or simply use the results as a prototype to take to a designer for full development. Here is a line taken from the book *Where the Wild Things Are*: “The wild things roared their terrible roars and gnashed their terrible teeth and rolled their terrible eyes and showed their terrible claws”, and here it is as an image generated by the AI:

As these examples show, the model has numerous use cases that can save businesses and individuals time and money, and that is before touching on its artistic capabilities or its extra tools for text-driven photo editing and generating variations.

If you would like to learn more, check out OpenAI’s write-up of the project here:

If you would like us to generate an image for you, send us your text at [email protected] and we will see what the AI comes up with!

For any other AI, machine learning or data analysis needs, contact us to Unlock Your Data:

07928510731

[email protected]

Victoria Road, Victoria House, TS1 3AP, Middlesbrough